Apple ‘Ferret-UI’ AI Model Revolutionizes Screen Understanding: A Breakthrough Approach

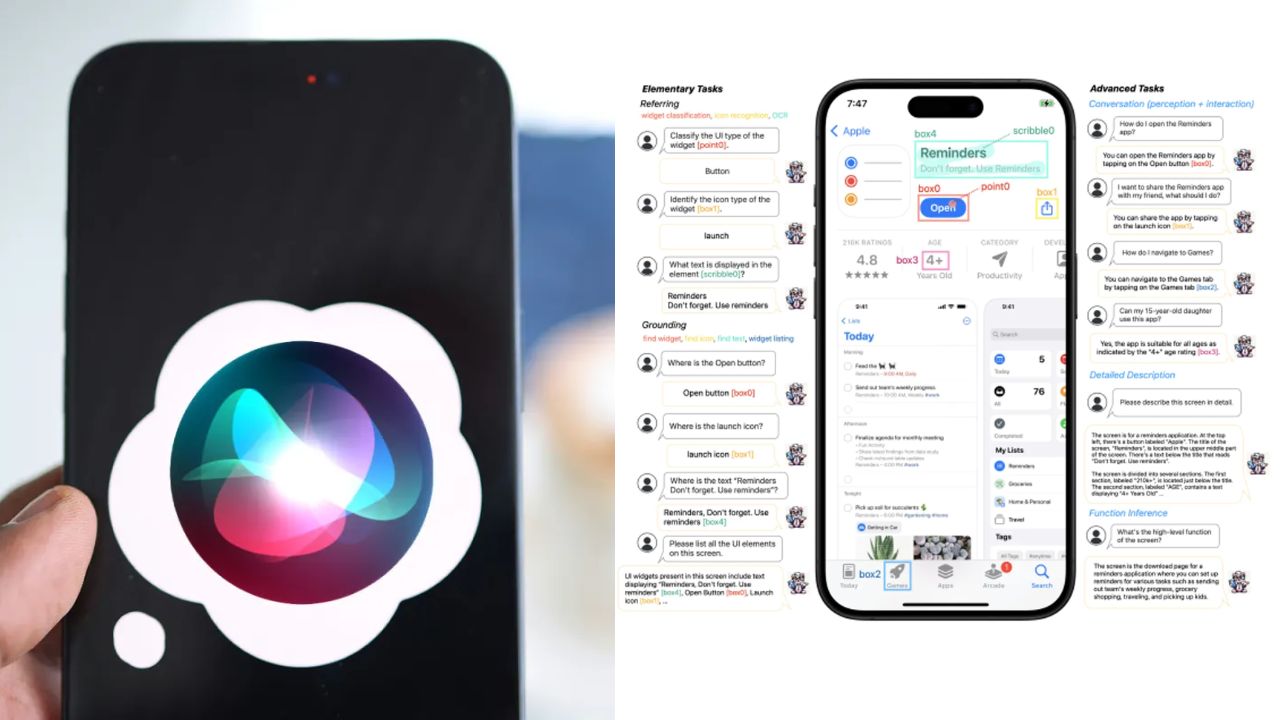


Apple is developing an AI model that may change how we interact with apps, and it could eventually reach users through Siri.

Ferret-UI is a multimodal large language model (MLLM), which means it can interpret visual input such as images as well as text. What sets it apart is that its multimodal capabilities have been tailored specifically to analyzing mobile app screens.

This matters because other models struggle to understand smartphone screens. Phone displays have different aspect ratios from typical images, and every app is packed with tiny icons, buttons, and menus. According to the research paper, Ferret-UI was trained specifically to handle this kind of user interface, using a wide variety of UI tasks such as icon recognition, text spotting, and widget listing.


Apple's researchers claim that Ferret-UI outperforms GPT-4V as well as other UI-focused MLLMs at understanding smartphone app screens.

A model like this could be useful for a variety of purposes. For starters, app developers could use it to test just how intuitive their creations are before releasing them to the public.

💡Imagine a multimodal LLM that can understand your iPhone screen📱? Here it is, we present Ferret-UI, that can do precise referring and grounding on your iPhone screen, and advanced reasoning. Free-form referring in, and boxes out. Ferret itself will also be presented at ICLR. https://t.co/xzOT2fySTw

— Zhe Gan (@zhegan4) April 9, 2024

A model like this could also greatly improve accessibility. For people with visual impairments, an AI-powered screen reader could describe app interfaces and actions far more intelligently than a basic text-to-speech tool. Beyond summarizing what's on the screen, it could list the available actions and even anticipate what the user wants to do next.


Perhaps the biggest draw, however, is a supercharged Siri that could navigate apps and perform actions on your behalf. The Rabbit R1, for example, automates hailing a cab by operating the Uber app for you. A Siri built on this kind of technology could handle similar tasks using only voice commands.

So far, voice assistants have been fairly limited in scope because developers have to integrate their apps manually through APIs. Technology like this could widen that scope significantly, since assistants would be able to understand apps the way humans do and perform actions directly on the screen.

For now, this is all theoretical, and Apple has yet to demonstrate how this breakthrough will be used in practice.
